Paper Reading - Grok-1

Grok-1, an open-source large language model

Basic parameters:

- Parameters: 314B

- Architecture: Mixture of 8 Experts (MoE)

- Experts Utilization: 2 experts used per token

- Layers: 64

- Attention Heads: 48 for queries, 8 for keys/values

- Embedding Size: 6,144

- Tokenization: SentencePiece tokenizer with 131,072 tokens

- Additional Features:

  - Rotary embeddings (RoPE)

  - Supports activation sharding and 8-bit quantization

- Maximum Sequence Length (context): 8,192 tokens
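The numbers above fit together: 6,144 / 48 gives a per-head size of 128, and 48 query heads sharing 8 key/value heads means each K/V head serves 6 query heads (grouped-query attention). The sketch below turns the list into a rough parameter count. It is an estimate under assumptions, not the official breakdown: each expert is assumed to be a gated feed-forward block with three 6,144 × 32,768 matrices (32,768 = 8 × 6,144 × 2 / 3, a widening factor I am assuming), the input and output embeddings are assumed to share one matrix, and norm weights are ignored. `GrokShape` and `estimate_params` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GrokShape:
    """Grok-1 sizes from the list above; ffn_hidden is an assumption."""
    emb_size: int = 6144
    num_layers: int = 64
    num_q_heads: int = 48
    num_kv_heads: int = 8
    vocab_size: int = 131072
    num_experts: int = 8
    experts_per_token: int = 2
    ffn_hidden: int = 32768  # assumed: int(8 * 6144) * 2 // 3


def estimate_params(c: GrokShape) -> dict:
    head_dim = c.emb_size // c.num_q_heads       # 6144 / 48 = 128
    q_per_kv = c.num_q_heads // c.num_kv_heads   # query heads sharing one K/V head
    # Attention: Q and O are emb x emb; K and V are emb x (kv_heads * head_dim).
    attn = 2 * c.emb_size * c.emb_size + 2 * c.emb_size * c.num_kv_heads * head_dim
    # Each expert is assumed to be a gated FFN: three emb x ffn_hidden matrices.
    expert = 3 * c.emb_size * c.ffn_hidden
    # Router: a linear map from the hidden state to one score per expert.
    router = c.emb_size * c.num_experts
    # Embedding table counted once, assuming tied input/output embeddings.
    embed = c.vocab_size * c.emb_size
    total = c.num_layers * (attn + c.num_experts * expert + router) + embed
    # Parameters actually used for one token: only the selected experts run.
    active = c.num_layers * (attn + c.experts_per_token * expert + router) + embed
    return {"head_dim": head_dim, "q_per_kv": q_per_kv,
            "total": total, "active": active}
```

Under these assumptions `estimate_params(GrokShape())` gives about 315.7B total parameters, close to the quoted 314B, and about 83.8B parameters used per token, because only 2 of the 8 experts run for each token.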

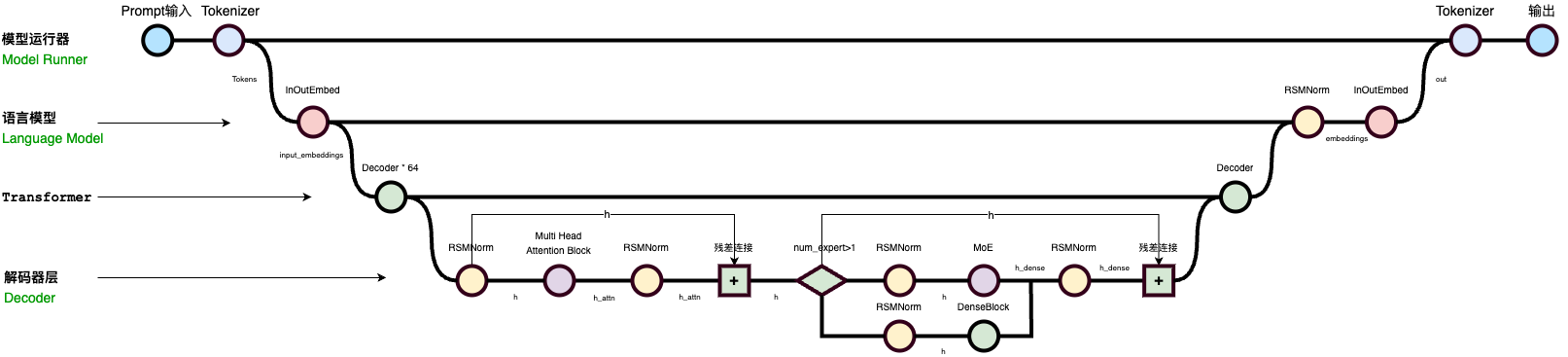
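"2 experts used per token" means each token passes through only 2 of the 8 expert networks. A minimal sketch of top-k routing for a single token in plain Python: a linear router scores every expert, a softmax turns the scores into probabilities, the k best experts run, and their outputs are mixed by the gate weights. This is the generic top-k MoE technique, not Grok-1's code; renormalising the selected gates so they sum to 1 is an assumption of this sketch, and `topk_moe` and `router_w` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> Vector:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def topk_moe(x: Vector,
             router_w: Sequence[Sequence[float]],
             experts: Sequence[Callable[[Vector], Vector]],
             k: int = 2) -> Vector:
    """Route one token vector x through its top-k experts and mix the outputs."""
    # Router logits: one dot product per expert (router_w has one row per expert).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_w]
    probs = softmax(logits)
    # Indices of the k highest-probability experts.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Assumed convention: renormalise the chosen gates to sum to 1.
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)          # only the selected experts are evaluated
        gate = probs[i] / norm
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out
```

Since unselected experts are never called, the compute per token scales with the 2 chosen experts rather than all 8, which is why the per-token cost is far below what 314B parameters would suggest.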

Project structure:

- run.py -> runner.py -> checkpoint.py -> model.py

- run.py (parameter configuration and input/output): params -> TransformerConfig() -> LanguageModelConfig() -> ModelRunner() -> InferenceRunner() -> initialize() -> run() -> sample_from_model() -> output

- runner.py (model loading and inference): ModelRunner, InferenceRunner

- checkpoint.py: loading the model weights

- model.py (the main Transformer model): InOutEmbed + Transformer
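As far as I can tell from model.py, InOutEmbed is a single embedding matrix used in both directions: looking up token vectors on the way in, and projecting hidden states back to vocabulary logits through the transposed matrix on the way out. The sketch below shows that weight-tying idea in plain Python. `TiedEmbedding` is a hypothetical stand-in, not the repository's Haiku module, and any scaling multipliers the real model applies are omitted.

```python
from typing import List, Sequence

Matrix = List[List[float]]


class TiedEmbedding:
    """Hypothetical stand-in for InOutEmbed: one table, used in and out."""

    def __init__(self, table: Matrix) -> None:
        self.table = table  # shape [vocab_size, emb_size]

    def embed(self, token_ids: Sequence[int]) -> Matrix:
        # Input side: one row of the table per token id.
        return [list(self.table[t]) for t in token_ids]

    def decode(self, hidden: Matrix) -> Matrix:
        # Output side: logits = hidden @ table.T, one score per vocabulary entry.
        return [[sum(h * w for h, w in zip(vec, row)) for row in self.table]
                for vec in hidden]
```

Sharing one 131,072 × 6,144 matrix this way saves about 805M parameters compared with keeping separate input and output tables.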

Model architecture:

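- So far I have only gone through the top-level structure; the attention layers and the mixture-of-experts layers will be covered in a later post.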

Unless otherwise stated, all posts on this blog are licensed under CC BY-NC-SA 4.0. Please credit Golden Arc when reposting!